Building and running Couchbase on FreeBSD

October 2015.

Let's try to build and run Couchbase on FreeBSD!

The system I'm using here is completely new.

# uname -a

FreeBSD couchbasebsd 10.2-RELEASE FreeBSD 10.2-RELEASE #0 r286666: Wed Aug 12 15:26:37 UTC 2015 root@releng1.nyi.freebsd.org:/usr/obj/usr/src/sys/GENERIC amd64

Fetching the source

Let's download repo, Google's tool to fetch multiple git repositories at once.

# fetch https://storage.googleapis.com/git-repo-downloads/repo -o /root/bin/repo --no-verify-peer

/root/bin/repo 100% of 25 kB 1481 kBps 00m00s

I don't have any certificate bundle installed, so I need --no-verify-peer to prevent openssl from complaining. In that case I must verify that the file is correct before executing it.

# sha1 /root/bin/repo

SHA1 (/root/bin/repo) = da0514e484f74648a890c0467d61ca415379f791

The list of SHA1s can be found in Android Open Source Project - Downloading the Source.
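The manual comparison above can also be scripted. This is only a sketch: `sha1_of` and `verify_sha1` are hypothetical helper names, and it assumes either FreeBSD's `sha1` or GNU `sha1sum` is on the path.

```sh
#!/bin/sh
# Print the SHA-1 digest of a file using whichever tool is available.
sha1_of() {
    if command -v sha1 >/dev/null 2>&1; then
        sha1 -q "$1"                      # FreeBSD: -q prints only the digest
    elif command -v sha1sum >/dev/null 2>&1; then
        sha1sum "$1" | cut -d ' ' -f 1    # GNU coreutils
    else
        return 127                        # no known SHA-1 tool found
    fi
}

# verify_sha1 FILE EXPECTED: succeed only when the digests match.
verify_sha1() {
    actual=$(sha1_of "$1") || return 1
    [ "$actual" = "$2" ]
}
```

With the digest shown above, the check would be `verify_sha1 /root/bin/repo da0514e484f74648a890c0467d61ca415379f791`, and the script should only be made executable if that succeeds.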

Make it executable.

# chmod +x /root/bin/repo

Create a directory to work in.

# mkdir couchbase && cd couchbase

I'll be fetching branch 3.1.1, which is the latest release at the time I'm writing this.

# /root/bin/repo init -u git://github.com/couchbase/manifest -m released/3.1.1.xml

env: python: No such file or directory

Told you the system was brand new.

# make -C /usr/ports/lang/python install clean

Let's try again.

# /root/bin/repo init -u git://github.com/couchbase/manifest -m released/3.1.1.xml

fatal: 'git' is not available

fatal: [Errno 2] No such file or directory

Please make sure git is installed and in your path.

Install git:

# make -C /usr/ports/devel/git install clean

Try again:

# /root/bin/repo init -u git://github.com/couchbase/manifest -m released/3.1.1.xml

Traceback (most recent call last):

File "/root/couchbase/.repo/repo/main.py", line 526, in <module>

_Main(sys.argv[1:])

File "/root/couchbase/.repo/repo/main.py", line 502, in _Main

result = repo._Run(argv) or 0

File "/root/couchbase/.repo/repo/main.py", line 175, in _Run

result = cmd.Execute(copts, cargs)

File "/root/couchbase/.repo/repo/subcmds/init.py", line 395, in Execute

self._ConfigureUser()

File "/root/couchbase/.repo/repo/subcmds/init.py", line 289, in _ConfigureUser

name = self._Prompt('Your Name', mp.UserName)

File "/root/couchbase/.repo/repo/project.py", line 703, in UserName

self._LoadUserIdentity()

File "/root/couchbase/.repo/repo/project.py", line 716, in _LoadUserIdentity

u = self.bare_git.var('GIT_COMMITTER_IDENT')

File "/root/couchbase/.repo/repo/project.py", line 2644, in runner

p.stderr))

error.GitError: manifests var:

*** Please tell me who you are.

Run

git config --global user.email "you@example.com"

git config --global user.name "Your Name"

to set your account's default identity.

Omit --global to set the identity only in this repository.

fatal: unable to auto-detect email address (got 'root@couchbasebsd.(none)')

Configure your git information:

# git config --global user.email "you@example.com"

# git config --global user.name "Your Name"

Try again:

# /root/bin/repo init -u git://github.com/couchbase/manifest -m released/3.1.1.xml

Your identity is: Your Name <you@example.com>

If you want to change this, please re-run 'repo init' with --config-name

[...]

repo has been initialized in /root/couchbase

Repo was initialized successfully. Let's sync!

# repo sync

[...]

Fetching projects: 100% (25/25), done.

Checking out files: 100% (2988/2988), done.

Checking out files: 100% (11107/11107), done.

Checking out files: 100% (3339/3339), done.

Checking out files: 100% (1256/1256), done.

Checking out files: 100% (4298/4298), done.

Syncing work tree: 100% (25/25), done.

We now have our source environment set up.

Building

Let's invoke the makefile.

# gmake

(cd build && cmake -G "Unix Makefiles" -D CMAKE_INSTALL_PREFIX="/root/couchbase/install" -D CMAKE_PREFIX_PATH=";/root/couchbase/install" -D PRODUCT_VERSION= -D BUILD_ENTERPRISE= -D CMAKE_BUILD_TYPE=Debug ..)

cmake: not found

Makefile:42: recipe for target 'build/Makefile' failed

gmake[1]: *** [build/Makefile] Error 127

GNUmakefile:5: recipe for target 'all' failed

gmake: *** [all] Error 2

CMake is missing? Let's install it.

# make -C /usr/ports/devel/cmake install clean

Let's try again...

# gmake

CMake Error at tlm/cmake/Modules/FindCouchbaseTcMalloc.cmake:38 (MESSAGE):

Can not find tcmalloc. Exiting.

Call Stack (most recent call first):

tlm/cmake/Modules/CouchbaseMemoryAllocator.cmake:3 (INCLUDE)

tlm/cmake/Modules/CouchbaseSingleModuleBuild.cmake:11 (INCLUDE)

CMakeLists.txt:12 (INCLUDE)

-- Configuring incomplete, errors occurred!

See also "/root/couchbase/build/CMakeFiles/CMakeOutput.log".

Makefile:42: recipe for target 'build/Makefile' failed

gmake[1]: *** [build/Makefile] Error 1

GNUmakefile:5: recipe for target 'all' failed

gmake: *** [all] Error 2

What the hell is the system looking for?

# cat tlm/cmake/Modules/FindCouchbaseTcMalloc.cmake

[...]

FIND_PATH(TCMALLOC_INCLUDE_DIR gperftools/malloc_hook_c.h

PATHS

${_gperftools_exploded}/include)

[...]

Where is that malloc_hook_c.h?

# grep -R gperftools/malloc_hook_c.h *

gperftools/Makefile.am: src/gperftools/malloc_hook_c.h \

gperftools/Makefile.am: src/gperftools/malloc_hook_c.h \

gperftools/Makefile.am:## src/gperftools/malloc_hook_c.h \

gperftools/src/google/malloc_hook_c.h:#warning "google/malloc_hook_c.h is deprecated. Use gperftools/malloc_hook_c.h instead"

gperftools/src/google/malloc_hook_c.h:#include <gperftools/malloc_hook_c.h>

gperftools/src/gperftools/malloc_hook.h:#include <gperftools/malloc_hook_c.h> // a C version of the malloc_hook interface

gperftools/src/tests/malloc_extension_c_test.c:#include <gperftools/malloc_hook_c.h>

[...]

It's in directory gperftools. Let's build that module first.

# cd gperftools/

# ./autogen.sh

# ./configure

[...]

config.status: creating Makefile

config.status: creating src/gperftools/tcmalloc.h

config.status: creating src/windows/gperftools/tcmalloc.h

config.status: creating src/config.h

config.status: executing depfiles commands

config.status: executing libtool commands

# make && make install

Let's try to build again.

# cd ..

# make

CMake Error at tlm/cmake/Modules/FindCouchbaseIcu.cmake:108 (MESSAGE):

Can't build Couchbase without ICU

Call Stack (most recent call first):

tlm/cmake/Modules/CouchbaseSingleModuleBuild.cmake:16 (INCLUDE)

CMakeLists.txt:12 (INCLUDE)

ICU is missing? Let's install it.

# make -C /usr/ports/devel/icu install clean

Let's try again.

# make

CMake Error at tlm/cmake/Modules/FindCouchbaseSnappy.cmake:34 (MESSAGE):

Can't build Couchbase without Snappy

Call Stack (most recent call first):

tlm/cmake/Modules/CouchbaseSingleModuleBuild.cmake:17 (INCLUDE)

CMakeLists.txt:12 (INCLUDE)

snappy is missing? Let's install it.

# make -C /usr/ports/archivers/snappy install

Do not make the mistake of installing multimedia/snappy instead. This is a totally unrelated module, and it will install 175 crappy Linux/X11 dependencies on your system.

Let's try again:

# gmake

CMake Error at tlm/cmake/Modules/FindCouchbaseV8.cmake:52 (MESSAGE):

Can't build Couchbase without V8

Call Stack (most recent call first):

tlm/cmake/Modules/CouchbaseSingleModuleBuild.cmake:18 (INCLUDE)

CMakeLists.txt:12 (INCLUDE)

V8 is missing? Let's install it.

# make -C /usr/ports/lang/v8 install clean

Let's try again.

# gmake

CMake Error at tlm/cmake/Modules/FindCouchbaseErlang.cmake:80 (MESSAGE):

Erlang not found - cannot continue building

Call Stack (most recent call first):

tlm/cmake/Modules/CouchbaseSingleModuleBuild.cmake:21 (INCLUDE)

CMakeLists.txt:12 (INCLUDE)

Erlang FTW!

# make -C /usr/ports/lang/erlang install clean
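In hindsight, the ports installed one at a time above could be installed in a single pass. The sketch below only collects the ports named so far; `install_ports` and `DRY_RUN` are hypothetical names, and the ports tree is assumed to live in /usr/ports.

```sh
#!/bin/sh
# Ports discovered the hard way in the steps above.
PORTS="lang/python devel/git devel/cmake devel/icu archivers/snappy lang/v8 lang/erlang"

# Install every port in $PORTS, stopping at the first failure.
# With DRY_RUN=1 the commands are printed instead of being run.
install_ports() {
    for port in $PORTS; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "make -C /usr/ports/$port install clean"
        else
            make -C "/usr/ports/$port" install clean || return 1
        fi
    done
}
```

Setting `DRY_RUN=1` before calling `install_ports` prints the seven commands without touching the system, which is a cheap way to review the list first.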

Let's build again.

/root/couchbase/platform/src/cb_time.c:60:2: error: "Don't know how to build cb_get_monotonic_seconds"

#error "Don't know how to build cb_get_monotonic_seconds"

^

1 error generated.

platform/CMakeFiles/platform.dir/build.make:169: recipe for target 'platform/CMakeFiles/platform.dir/src/cb_time.c.o' failed

gmake[4]: *** [platform/CMakeFiles/platform.dir/src/cb_time.c.o] Error 1

CMakeFiles/Makefile2:285: recipe for target 'platform/CMakeFiles/platform.dir/all' failed

gmake[3]: *** [platform/CMakeFiles/platform.dir/all] Error 2

Makefile:126: recipe for target 'all' failed

gmake[2]: *** [all] Error 2

Makefile:36: recipe for target 'compile' failed

gmake[1]: *** [compile] Error 2

GNUmakefile:5: recipe for target 'all' failed

gmake: *** [all] Error 2

At last! A real error. Let's see the code.

# cat platform/src/cb_time.c

/*

return a monotonically increasing value with a seconds frequency.

*/

uint64_t cb_get_monotonic_seconds() {

uint64_t seconds = 0;

#if defined(WIN32)

/* GetTickCound64 gives us near 60years of ticks...*/

seconds = (GetTickCount64() / 1000);

#elif defined(__APPLE__)

uint64_t time = mach_absolute_time();

static mach_timebase_info_data_t timebase;

if (timebase.denom == 0) {

mach_timebase_info(&timebase);

}

seconds = (double)time * timebase.numer / timebase.denom * 1e-9;

#elif defined(__linux__) || defined(__sun)

/* Linux and Solaris can use clock_gettime */

struct timespec tm;

if (clock_gettime(CLOCK_MONOTONIC, &tm) == -1) {

abort();

}

seconds = tm.tv_sec;

#else

#error "Don't know how to build cb_get_monotonic_seconds"

#endif

return seconds;

}

FreeBSD also has clock_gettime, so let's patch the file:

diff -u platform/src/cb_time.c.orig platform/src/cb_time.c

--- platform/src/cb_time.c.orig 2015-10-07 19:26:14.258513000 +0200

+++ platform/src/cb_time.c 2015-10-07 19:26:29.768324000 +0200

@@ -49,7 +49,7 @@

}

seconds = (double)time * timebase.numer / timebase.denom * 1e-9;

-#elif defined(__linux__) || defined(__sun)

+#elif defined(__linux__) || defined(__sun) || defined(__FreeBSD__)

/* Linux and Solaris can use clock_gettime */

struct timespec tm;

if (clock_gettime(CLOCK_MONOTONIC, &tm) == -1) {
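The patch relies on `__FreeBSD__`, a macro the compiler predefines on FreeBSD. A quick way to see which platform macros a compiler provides is to dump its predefined macros. The helper names `predefined_macros` and `has_macro` are made up for this sketch, and it assumes a gcc- or clang-style `cc` that accepts `-dM -E`.

```sh
#!/bin/sh
# Dump the macros the C compiler predefines for the current target.
# CC defaults to "cc"; override it to inspect another compiler.
predefined_macros() {
    "${CC:-cc}" -dM -E - </dev/null
}

# has_macro NAME: succeed if NAME is predefined with a value.
has_macro() {
    predefined_macros | grep -q "^#define $1 "
}
```

On the FreeBSD box used here, `has_macro __FreeBSD__` should succeed and `has_macro __linux__` should fail, which is why the original `#elif` never matched.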

Next error, please.

# gmake

Linking CXX shared library libplatform.so

/usr/bin/ld: cannot find -ldl

CC: error: linker command failed with exit code 1 (use -v to see invocation)

platform/CMakeFiles/platform.dir/build.make:210: recipe for target 'platform/libplatform.so.0.1.0' failed

gmake[4]: *** [platform/libplatform.so.0.1.0] Error 1

Aaah, good old Linux dl library. Let's get rid of that in CMakeLists.txt:

diff -u CMakeLists.txt.orig CMakeLists.txt

--- CMakeLists.txt.orig 2015-10-07 19:30:45.546580000 +0200

+++ CMakeLists.txt 2015-10-07 19:36:27.052693000 +0200

@@ -34,7 +34,9 @@

ELSE (WIN32)

SET(PLATFORM_FILES src/cb_pthreads.c src/urandom.c)

SET(THREAD_LIBS "pthread")

- SET(DLOPENLIB "dl")

+ IF(NOT CMAKE_SYSTEM_NAME STREQUAL "FreeBSD")

+ SET(DLOPENLIB "dl")

+ ENDIF(NOT CMAKE_SYSTEM_NAME STREQUAL "FreeBSD")

IF (NOT APPLE)

SET(RTLIB "rt")
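The diffs in this post all come from the same routine: keep a pristine copy with an .orig suffix, edit the file, then diff the two. A minimal sketch of that routine follows; `make_patch` is a hypothetical helper name.

```sh
#!/bin/sh
# make_patch FILE: print a unified diff from FILE.orig (pristine copy)
# to FILE (edited copy). The pristine file goes first, so "+" lines
# are the lines added by the fix.
make_patch() {
    # diff exits 1 when the files differ, which is the expected case;
    # only an exit status of 2 or more is a real failure.
    diff -u "$1.orig" "$1" || [ $? -eq 1 ]
}
```

For example, after `cp CMakeLists.txt CMakeLists.txt.orig` and an edit, `make_patch CMakeLists.txt > dl.patch` saves the change, and `patch -p0 < dl.patch` should reapply it on a fresh checkout (the file name dl.patch is arbitrary).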

Next!

FreeBSD has DTrace, but it is not the same as the Solaris one, so we must disable it.

Someone already did that: see the commit "Disable DTrace for FreeBSD" for the patch:

--- a/cmake/Modules/FindCouchbaseDtrace.cmake

+++ b/cmake/Modules/FindCouchbaseDtrace.cmake

@@ -1,18 +1,19 @@

-# stupid systemtap use a binary named dtrace as well..

+# stupid systemtap use a binary named dtrace as well, but it's not dtrace

+IF (NOT CMAKE_SYSTEM_NAME STREQUAL "Linux")

+ IF (CMAKE_SYSTEM_NAME STREQUAL "FreeBSD")

+ MESSAGE(STATUS "We don't have support for DTrace on FreeBSD")

+ ELSE (CMAKE_SYSTEM_NAME STREQUAL "FreeBSD")

+ FIND_PROGRAM(DTRACE dtrace)

+ IF (DTRACE)

+ SET(ENABLE_DTRACE True CACHE BOOL "Whether DTrace has been found")

+ MESSAGE(STATUS "Found dtrace in ${DTRACE}")

-IF (NOT ${CMAKE_SYSTEM_NAME} STREQUAL "Linux")

+ IF (CMAKE_SYSTEM_NAME MATCHES "SunOS")

+ SET(DTRACE_NEED_INSTUMENT True CACHE BOOL

+ "Whether DTrace should instrument object files")

+ ENDIF (CMAKE_SYSTEM_NAME MATCHES "SunOS")

+ ENDIF (DTRACE)

-FIND_PROGRAM(DTRACE dtrace)

-IF (DTRACE)

- SET(ENABLE_DTRACE True CACHE BOOL "Whether DTrace has been found")

- MESSAGE(STATUS "Found dtrace in ${DTRACE}")

-

- IF (CMAKE_SYSTEM_NAME MATCHES "SunOS")

- SET(DTRACE_NEED_INSTUMENT True CACHE BOOL

- "Whether DTrace should instrument object files")

- ENDIF (CMAKE_SYSTEM_NAME MATCHES "SunOS")

-ENDIF (DTRACE)

-

-MARK_AS_ADVANCED(DTRACE_NEED_INSTUMENT ENABLE_DTRACE DTRACE)

-

-ENDIF (NOT ${CMAKE_SYSTEM_NAME} STREQUAL "Linux")

+ MARK_AS_ADVANCED(DTRACE_NEED_INSTUMENT ENABLE_DTRACE DTRACE)

+ ENDIF (CMAKE_SYSTEM_NAME STREQUAL "FreeBSD")

+ENDIF (NOT CMAKE_SYSTEM_NAME STREQUAL "Linux")

Next!

# gmake

Linking C executable couch_compact

libcouchstore.so: undefined reference to `fdatasync'

cc: error: linker command failed with exit code 1 (use -v to see invocation)

couchstore/CMakeFiles/couch_compact.dir/build.make:89: recipe for target 'couchstore/couch_compact' failed

gmake[4]: *** [couchstore/couch_compact] Error 1

CMakeFiles/Makefile2:1969: recipe for target 'couchstore/CMakeFiles/couch_compact.dir/all' failed

FreeBSD does not have fdatasync. Instead, we should use fsync:

diff -u couchstore/config.cmake.h.in.orig couchstore/config.cmake.h.in

--- couchstore/config.cmake.h.in.orig 2015-10-07 19:56:05.461932000 +0200

+++ couchstore/config.cmake.h.in 2015-10-07 19:56:42.973040000 +0200

@@ -38,10 +38,10 @@

#include <unistd.h>

#endif

-#ifdef __APPLE__

-/* autoconf things OS X has fdatasync but it doesn't */

+#if defined(__APPLE__) || defined(__FreeBSD__)

+/* autoconf things OS X and FreeBSD have fdatasync but they don't */

#define fdatasync(FD) fsync(FD)

-#endif /* __APPLE__ */

+#endif /* __APPLE__ || __FreeBSD__ */

#include <platform/platform.h>

Next!

[ 56%] Building C object sigar/build-src/CMakeFiles/sigar.dir/sigar.c.o

/root/couchbase/sigar/src/sigar.c:1071:12: fatal error: 'utmp.h' file not found

# include <utmp.h>

^

1 error generated.

sigar/build-src/CMakeFiles/sigar.dir/build.make:77: recipe for target 'sigar/build-src/CMakeFiles/sigar.dir/sigar.c.o' failed

gmake[4]: *** [sigar/build-src/CMakeFiles/sigar.dir/sigar.c.o] Error 1

CMakeFiles/Makefile2:4148: recipe for target 'sigar/build-src/CMakeFiles/sigar.dir/all' failed

I was planning to port that file to utmpx, and then I wondered how the FreeBSD port of the library (java/sigar) was working. It turned out the patch had already been written:

Commit "Make utmp-handling more standards-compliant." in amishHammer/sigar on GitHub (https://github.com/amishHammer/sigar/commit/67b476efe0f2a7c644f3966b79f5e358f67752e9)

diff --git a/src/sigar.c b/src/sigar.c

index 8bd7e91..7f76dfd 100644

--- a/src/sigar.c

+++ b/src/sigar.c

@@ -30,6 +30,11 @@

#ifndef WIN32

#include <arpa/inet.h>

#endif

+#if defined(HAVE_UTMPX_H)

+# include <utmpx.h>

+#elif defined(HAVE_UTMP_H)

+# include <utmp.h>

+#endif

#include "sigar.h"

#include "sigar_private.h"

@@ -1024,40 +1029,7 @@ SIGAR_DECLARE(int) sigar_who_list_destroy(sigar_t *sigar,

return SIGAR_OK;

}

-#ifdef DARWIN

-#include <AvailabilityMacros.h>

-#endif

-#ifdef MAC_OS_X_VERSION_10_5

-# if MAC_OS_X_VERSION_MIN_REQUIRED >= MAC_OS_X_VERSION_10_5

-# define SIGAR_NO_UTMP

-# endif

-/* else 10.4 and earlier or compiled with -mmacosx-version-min=10.3 */

-#endif

-

-#if defined(__sun)

-# include <utmpx.h>

-# define SIGAR_UTMP_FILE _UTMPX_FILE

-# define ut_time ut_tv.tv_sec

-#elif defined(WIN32)

-/* XXX may not be the default */

-#define SIGAR_UTMP_FILE "C:\\cygwin\\var\\run\\utmp"

-#define UT_LINESIZE 16

-#define UT_NAMESIZE 16

-#define UT_HOSTSIZE 256

-#define UT_IDLEN 2

-#define ut_name ut_user

-

-struct utmp {

- short ut_type;

- int ut_pid;

- char ut_line[UT_LINESIZE];

- char ut_id[UT_IDLEN];

- time_t ut_time;

- char ut_user[UT_NAMESIZE];

- char ut_host[UT_HOSTSIZE];

- long ut_addr;

-};

-#elif defined(NETWARE)

+#if defined(NETWARE)

static char *getpass(const char *prompt)

{

static char password[BUFSIZ];

@@ -1067,109 +1039,48 @@ static char *getpass(const char *prompt)

return (char *)&password;

}

-#elif !defined(SIGAR_NO_UTMP)

-# include <utmp.h>

-# ifdef UTMP_FILE

-# define SIGAR_UTMP_FILE UTMP_FILE

-# else

-# define SIGAR_UTMP_FILE _PATH_UTMP

-# endif

-#endif

-

-#if defined(__FreeBSD__) || defined(__OpenBSD__) || defined(__NetBSD__) || defined(DARWIN)

-# define ut_user ut_name

#endif

-#ifdef DARWIN

-/* XXX from utmpx.h; sizeof changed in 10.5 */

-/* additionally, utmpx does not work on 10.4 */

-#define SIGAR_HAS_UTMPX

-#define _PATH_UTMPX "/var/run/utmpx"

-#define _UTX_USERSIZE 256 /* matches MAXLOGNAME */

-#define _UTX_LINESIZE 32

-#define _UTX_IDSIZE 4

-#define _UTX_HOSTSIZE 256

-struct utmpx {

- char ut_user[_UTX_USERSIZE]; /* login name */

- char ut_id[_UTX_IDSIZE]; /* id */

- char ut_line[_UTX_LINESIZE]; /* tty name */

- pid_t ut_pid; /* process id creating the entry */

- short ut_type; /* type of this entry */

- struct timeval ut_tv; /* time entry was created */

- char ut_host[_UTX_HOSTSIZE]; /* host name */

- __uint32_t ut_pad[16]; /* reserved for future use */

-};

-#define ut_xtime ut_tv.tv_sec

-#define UTMPX_USER_PROCESS 7

-/* end utmpx.h */

-#define SIGAR_UTMPX_FILE _PATH_UTMPX

-#endif

-

-#if !defined(NETWARE) && !defined(_AIX)

-

#define WHOCPY(dest, src) \

SIGAR_SSTRCPY(dest, src); \

if (sizeof(src) < sizeof(dest)) \

dest[sizeof(src)] = '\0'

-#ifdef SIGAR_HAS_UTMPX

-static int sigar_who_utmpx(sigar_t *sigar,

- sigar_who_list_t *wholist)

+static int sigar_who_utmp(sigar_t *sigar,

+ sigar_who_list_t *wholist)

{

- FILE *fp;

- struct utmpx ut;

+#if defined(HAVE_UTMPX_H)

+ struct utmpx *ut;

- if (!(fp = fopen(SIGAR_UTMPX_FILE, "r"))) {

- return errno;

- }

+ setutxent();

- while (fread(&ut, sizeof(ut), 1, fp) == 1) {

+ while ((ut = getutxent()) != NULL) {

sigar_who_t *who;

- if (*ut.ut_user == '\0') {

+ if (*ut->ut_user == '\0') {

continue;

}

-#ifdef UTMPX_USER_PROCESS

- if (ut.ut_type != UTMPX_USER_PROCESS) {

+ if (ut->ut_type != USER_PROCESS) {

continue;

}

-#endif

SIGAR_WHO_LIST_GROW(wholist);

who = &wholist->data[wholist->number++];

- WHOCPY(who->user, ut.ut_user);

- WHOCPY(who->device, ut.ut_line);

- WHOCPY(who->host, ut.ut_host);

+ WHOCPY(who->user, ut->ut_user);

+ WHOCPY(who->device, ut->ut_line);

+ WHOCPY(who->host, ut->ut_host);

- who->time = ut.ut_xtime;

+ who->time = ut->ut_tv.tv_sec;

}

- fclose(fp);

-

- return SIGAR_OK;

-}

-#endif

-

-#if defined(SIGAR_NO_UTMP) && defined(SIGAR_HAS_UTMPX)

-#define sigar_who_utmp sigar_who_utmpx

-#else

-static int sigar_who_utmp(sigar_t *sigar,

- sigar_who_list_t *wholist)

-{

+ endutxent();

+#elif defined(HAVE_UTMP_H)

FILE *fp;

-#ifdef __sun

- /* use futmpx w/ pid32_t for sparc64 */

- struct futmpx ut;

-#else

struct utmp ut;

-#endif

- if (!(fp = fopen(SIGAR_UTMP_FILE, "r"))) {

-#ifdef SIGAR_HAS_UTMPX

- /* Darwin 10.5 */

- return sigar_who_utmpx(sigar, wholist);

-#endif

+

+ if (!(fp = fopen(_PATH_UTMP, "r"))) {

return errno;

}

@@ -1189,7 +1100,7 @@ static int sigar_who_utmp(sigar_t *sigar,

SIGAR_WHO_LIST_GROW(wholist);

who = &wholist->data[wholist->number++];

- WHOCPY(who->user, ut.ut_user);

+ WHOCPY(who->user, ut.ut_name);

WHOCPY(who->device, ut.ut_line);

WHOCPY(who->host, ut.ut_host);

@@ -1197,11 +1108,10 @@ static int sigar_who_utmp(sigar_t *sigar,

}

fclose(fp);

+#endif

return SIGAR_OK;

}

-#endif /* SIGAR_NO_UTMP */

-#endif /* NETWARE */

#if defined(WIN32)

Next!

# gmake

[ 75%] Generating couch_btree.beam

compile: warnings being treated as errors

/root/couchbase/couchdb/src/couchdb/couch_btree.erl:415: variable 'NodeList' exported from 'case' (line 391)

/root/couchbase/couchdb/src/couchdb/couch_btree.erl:1010: variable 'NodeList' exported from 'case' (line 992)

couchdb/src/couchdb/CMakeFiles/couchdb.dir/build.make:151: recipe for target 'couchdb/src/couchdb/couch_btree.beam' failed

gmake[4]: *** [couchdb/src/couchdb/couch_btree.beam] Error 1

CMakeFiles/Makefile2:5531: recipe for target 'couchdb/src/couchdb/CMakeFiles/couchdb.dir/all' failed

Fortunately, I'm fluent in Erlang.

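I'm not sure why compiler option +warn_export_vars was set if the code contains such errors. Let's fix them. Note that the first diff below (couch_btree.erl) was generated with its arguments swapped, so there the - lines are the fixed code and the + lines are the original.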

diff -u /root/couchbase/couchdb/src/couchdb/couch_btree.erl /root/couchbase/couchdb/src/couchdb/couch_btree.erl.orig

--- /root/couchbase/couchdb/src/couchdb/couch_btree.erl 2015-10-07 22:01:05.191344000 +0200

+++ /root/couchbase/couchdb/src/couchdb/couch_btree.erl.orig 2015-10-07 21:59:43.359322000 +0200

@@ -388,12 +388,13 @@

end.

modify_node(Bt, RootPointerInfo, Actions, QueryOutput, Acc, PurgeFun, PurgeFunAcc, KeepPurging) ->

- {NodeType, NodeList} = case RootPointerInfo of

+ case RootPointerInfo of

nil ->

- {kv_node, []};

+ NodeType = kv_node,

+ NodeList = [];

_Tuple ->

Pointer = element(1, RootPointerInfo),

- get_node(Bt, Pointer)

+ {NodeType, NodeList} = get_node(Bt, Pointer)

end,

case NodeType of

@@ -988,12 +989,13 @@

guided_purge(Bt, NodeState, GuideFun, GuideAcc) ->

% inspired by modify_node/5

- {NodeType, NodeList} = case NodeState of

+ case NodeState of

nil ->

- {kv_node, []};

+ NodeType = kv_node,

+ NodeList = [];

_Tuple ->

Pointer = element(1, NodeState),

- get_node(Bt, Pointer)

+ {NodeType, NodeList} = get_node(Bt, Pointer)

end,

{ok, NewNodeList, GuideAcc2, Bt2, Go} =

case NodeType of

diff -u /root/couchbase/couchdb/src/couchdb/couch_compaction_daemon.erl.orig /root/couchbase/couchdb/src/couchdb/couch_compaction_daemon.erl

--- /root/couchbase/couchdb/src/couchdb/couch_compaction_daemon.erl.orig 2015-10-07 22:01:48.495966000 +0200

+++ /root/couchbase/couchdb/src/couchdb/couch_compaction_daemon.erl 2015-10-07 22:02:15.620989000 +0200

@@ -142,14 +142,14 @@

true ->

{ok, DbCompactPid} = couch_db:start_compact(Db),

TimeLeft = compact_time_left(Config),

- case Config#config.parallel_view_compact of

+ ViewsMonRef = case Config#config.parallel_view_compact of

true ->

ViewsCompactPid = spawn_link(fun() ->

maybe_compact_views(DbName, DDocNames, Config)

end),

- ViewsMonRef = erlang:monitor(process, ViewsCompactPid);

+ erlang:monitor(process, ViewsCompactPid);

false ->

- ViewsMonRef = nil

+ nil

end,

DbMonRef = erlang:monitor(process, DbCompactPid),

receive

Next!

[ 84%] Generating ebin/couch_set_view_group.beam

/root/couchbase/couchdb/src/couch_set_view/src/couch_set_view_group.erl:3178: type dict() undefined

couchdb/src/couch_set_view/CMakeFiles/couch_set_view.dir/build.make:87: recipe for target 'couchdb/src/couch_set_view/ebin/couch_set_view_group.beam' failed

gmake[4]: *** [couchdb/src/couch_set_view/ebin/couch_set_view_group.beam] Error 1

CMakeFiles/Makefile2:5720: recipe for target 'couchdb/src/couch_set_view/CMakeFiles/couch_set_view.dir/all' failed

gmake[3]: *** [couchdb/src/couch_set_view/CMakeFiles/couch_set_view.dir/all] Error 2

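These errors happen because I'm building the project with Erlang 18, where the built-in dict() and queue() types no longer exist (they are now dict:dict() and queue:queue()). I guess I wouldn't have had them with version 17.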

Anyway, let's fix them.
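The type renames in the diffs that follow are mechanical, so they could be scripted instead of edited by hand. This is a sketch only, not what was actually run here: `qualify_types` is a hypothetical name, and the regular expression assumes that a bare dict() or queue() in these files is always a type, never a call to a local function.

```sh
#!/bin/sh
# Rewrite the bare dict() and queue() types to their module-qualified
# forms (dict:dict(), queue:queue()). Reads Erlang source on stdin and
# writes the result to stdout. Already-qualified types and calls such
# as dict:new() are left untouched.
qualify_types() {
    sed -E 's/(^|[^:A-Za-z0-9_])(dict|queue)\(\)/\1\2:\2()/g'
}
```

A file would be converted with something like `qualify_types < ale.erl.orig > ale.erl`, followed by a diff against the .orig copy to review what changed.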

--- couchdb.orig/src/couch_dcp/src/couch_dcp_client.erl 2015-10-08 11:26:37.034138000 +0200

+++ couchdb/src/couch_dcp/src/couch_dcp_client.erl 2015-10-07 22:07:35.556126000 +0200

@@ -47,13 +47,13 @@

bufsocket = nil :: #bufsocket{} | nil,

timeout = 5000 :: timeout(),

request_id = 0 :: request_id(),

- pending_requests = dict:new() :: dict(),

- stream_queues = dict:new() :: dict(),

+ pending_requests = dict:new() :: dict:dict(),

+ stream_queues = dict:new() :: dict:dict(),

active_streams = [] :: list(),

worker_pid :: pid(),

max_buffer_size = ?MAX_BUF_SIZE :: integer(),

total_buffer_size = 0 :: non_neg_integer(),

- stream_info = dict:new() :: dict(),

+ stream_info = dict:new() :: dict:dict(),

args = [] :: list()

}).

@@ -1378,7 +1378,7 @@

{error, Error}

end.

--spec get_queue_size(queue(), non_neg_integer()) -> non_neg_integer().

+-spec get_queue_size(queue:queue(), non_neg_integer()) -> non_neg_integer().

get_queue_size(EvQueue, Size) ->

case queue:out(EvQueue) of

{empty, _} ->

diff -r -u couchdb.orig/src/couch_set_view/src/couch_set_view_group.erl couchdb/src/couch_set_view/src/couch_set_view_group.erl

--- couchdb.orig/src/couch_set_view/src/couch_set_view_group.erl 2015-10-08 11:26:37.038856000 +0200

+++ couchdb/src/couch_set_view/src/couch_set_view_group.erl 2015-10-07 22:04:53.198951000 +0200

@@ -118,7 +118,7 @@

auto_transfer_replicas = true :: boolean(),

replica_partitions = [] :: ordsets:ordset(partition_id()),

pending_transition_waiters = [] :: [{From::{pid(), reference()}, #set_view_group_req{}}],

- update_listeners = dict:new() :: dict(),

+ update_listeners = dict:new() :: dict:dict(),

compact_log_files = nil :: 'nil' | {[[string()]], partition_seqs(), partition_versions()},

timeout = ?DEFAULT_TIMEOUT :: non_neg_integer() | 'infinity'

}).

@@ -3136,7 +3136,7 @@

}.

--spec notify_update_listeners(#state{}, dict(), #set_view_group{}) -> dict().

+-spec notify_update_listeners(#state{}, dict:dict(), #set_view_group{}) -> dict:dict().

notify_update_listeners(State, Listeners, NewGroup) ->

case dict:size(Listeners) == 0 of

true ->

@@ -3175,7 +3175,7 @@

end.

--spec error_notify_update_listeners(#state{}, dict(), monitor_error()) -> dict().

+-spec error_notify_update_listeners(#state{}, dict:dict(), monitor_error()) -> dict:dict().

error_notify_update_listeners(State, Listeners, Error) ->

_ = dict:fold(

fun(Ref, #up_listener{pid = ListPid, partition = PartId}, _Acc) ->

diff -r -u couchdb.orig/src/couch_set_view/src/mapreduce_view.erl couchdb/src/couch_set_view/src/mapreduce_view.erl

--- couchdb.orig/src/couch_set_view/src/mapreduce_view.erl 2015-10-08 11:26:37.040295000 +0200

+++ couchdb/src/couch_set_view/src/mapreduce_view.erl 2015-10-07 22:05:56.157242000 +0200

@@ -109,7 +109,7 @@

convert_primary_index_kvs_to_binary(Rest, Group, [{KeyBin, V} | Acc]).

--spec finish_build(#set_view_group{}, dict(), string()) ->

+-spec finish_build(#set_view_group{}, dict:dict(), string()) ->

{#set_view_group{}, pid()}.

finish_build(Group, TmpFiles, TmpDir) ->

#set_view_group{

diff -r -u couchdb.orig/src/couchdb/couch_btree.erl couchdb/src/couchdb/couch_btree.erl

--- couchdb.orig/src/couchdb/couch_btree.erl 2015-10-08 11:26:37.049320000 +0200

+++ couchdb/src/couchdb/couch_btree.erl 2015-10-07 22:01:05.191344000 +0200

@@ -388,13 +388,12 @@

end.

modify_node(Bt, RootPointerInfo, Actions, QueryOutput, Acc, PurgeFun, PurgeFunAcc, KeepPurging) ->

- case RootPointerInfo of

+ {NodeType, NodeList} = case RootPointerInfo of

nil ->

- NodeType = kv_node,

- NodeList = [];

+ {kv_node, []};

_Tuple ->

Pointer = element(1, RootPointerInfo),

- {NodeType, NodeList} = get_node(Bt, Pointer)

+ get_node(Bt, Pointer)

end,

case NodeType of

@@ -989,13 +988,12 @@

guided_purge(Bt, NodeState, GuideFun, GuideAcc) ->

% inspired by modify_node/5

- case NodeState of

+ {NodeType, NodeList} = case NodeState of

nil ->

- NodeType = kv_node,

- NodeList = [];

+ {kv_node, []};

_Tuple ->

Pointer = element(1, NodeState),

- {NodeType, NodeList} = get_node(Bt, Pointer)

+ get_node(Bt, Pointer)

end,

{ok, NewNodeList, GuideAcc2, Bt2, Go} =

case NodeType of

[ 98%] Generating ebin/vtree_cleanup.beam

compile: warnings being treated as errors

/root/couchbase/geocouch/vtree/src/vtree_cleanup.erl:32: erlang:now/0: Deprecated BIF. See the "Time and Time Correction in Erlang" chapter of the ERTS User's Guide for more information.

/root/couchbase/geocouch/vtree/src/vtree_cleanup.erl:42: erlang:now/0: Deprecated BIF. See the "Time and Time Correction in Erlang" chapter of the ERTS User's Guide for more information.

../geocouch/build/vtree/CMakeFiles/vtree.dir/build.make:64: recipe for target '../geocouch/build/vtree/ebin/vtree_cleanup.beam' failed

gmake[4]: *** [../geocouch/build/vtree/ebin/vtree_cleanup.beam] Error 1

CMakeFiles/Makefile2:6702: recipe for target '../geocouch/build/vtree/CMakeFiles/vtree.dir/all' failed

gmake[3]: *** [../geocouch/build/vtree/CMakeFiles/vtree.dir/all] Error 2

diff -r -u geocouch.orig/gc-couchbase/src/spatial_view.erl geocouch/gc-couchbase/src/spatial_view.erl

--- geocouch.orig/gc-couchbase/src/spatial_view.erl 2015-10-08 11:29:05.323361000 +0200

+++ geocouch/gc-couchbase/src/spatial_view.erl 2015-10-07 22:17:09.741790000 +0200

@@ -166,7 +166,7 @@

% Build the tree out of the sorted files

--spec finish_build(#set_view_group{}, dict(), string()) ->

+-spec finish_build(#set_view_group{}, dict:dict(), string()) ->

{#set_view_group{}, pid()}.

finish_build(Group, TmpFiles, TmpDir) ->

#set_view_group{

diff -r -u geocouch.orig/vtree/src/vtree_cleanup.erl geocouch/vtree/src/vtree_cleanup.erl

--- geocouch.orig/vtree/src/vtree_cleanup.erl 2015-10-08 11:29:05.327423000 +0200

+++ geocouch/vtree/src/vtree_cleanup.erl 2015-10-07 22:12:26.915600000 +0200

@@ -29,7 +29,7 @@

cleanup(#vtree{root=nil}=Vt, _Nodes) ->

Vt;

cleanup(Vt, Nodes) ->

- T1 = now(),

+ T1 = erlang:monotonic_time(seconds),

Root = Vt#vtree.root,

PartitionedNodes = [Nodes],

KpNodes = cleanup_multiple(Vt, PartitionedNodes, [Root]),

@@ -39,7 +39,7 @@

vtree_modify:write_new_root(Vt, KpNodes)

end,

?LOG_DEBUG("Cleanup took: ~ps~n",

- [timer:now_diff(now(), T1)/1000000]),

+ [erlang:monotonic_time(seconds) - T1]),

Vt#vtree{root=NewRoot}.

-spec cleanup_multiple(Vt :: #vtree{}, ToCleanup :: [#kv_node{}],

diff -r -u geocouch.orig/vtree/src/vtree_delete.erl geocouch/vtree/src/vtree_delete.erl

--- geocouch.orig/vtree/src/vtree_delete.erl 2015-10-08 11:29:05.327537000 +0200

+++ geocouch/vtree/src/vtree_delete.erl 2015-10-07 22:13:51.733064000 +0200

@@ -30,7 +30,7 @@

delete(#vtree{root=nil}=Vt, _Nodes) ->

Vt;

delete(Vt, Nodes) ->

- T1 = now(),

+ T1 = erlang:monotonic_time(seconds),

Root = Vt#vtree.root,

PartitionedNodes = [Nodes],

KpNodes = delete_multiple(Vt, PartitionedNodes, [Root]),

@@ -40,7 +40,7 @@

vtree_modify:write_new_root(Vt, KpNodes)

end,

?LOG_DEBUG("Deletion took: ~ps~n",

- [timer:now_diff(now(), T1)/1000000]),

+ [erlang:monotonic_time(seconds) - T1]),

Vt#vtree{root=NewRoot}.

diff -r -u geocouch.orig/vtree/src/vtree_insert.erl geocouch/vtree/src/vtree_insert.erl

--- geocouch.orig/vtree/src/vtree_insert.erl 2015-10-08 11:29:05.327648000 +0200

+++ geocouch/vtree/src/vtree_insert.erl 2015-10-07 22:15:50.812447000 +0200

@@ -26,7 +26,7 @@

insert(Vt, []) ->

Vt;

insert(#vtree{root=nil}=Vt, Nodes) ->

- T1 = now(),

+ T1 = erlang:monotonic_time(seconds),

% If we would do single inserts, the first node that was inserted would

% have set the original Mbb `MbbO`

MbbO = (hd(Nodes))#kv_node.key,

@@ -48,7 +48,7 @@

ArbitraryBulkSize = round(math:log(Threshold)+50),

Vt3 = insert_in_bulks(Vt2, Rest, ArbitraryBulkSize),

?LOG_DEBUG("Insertion into empty tree took: ~ps~n",

- [timer:now_diff(now(), T1)/1000000]),

+ [erlang:monotonic_time(seconds) - T1]),

?LOG_DEBUG("Root pos: ~p~n", [(Vt3#vtree.root)#kp_node.childpointer]),

Vt3;

false ->

@@ -56,13 +56,13 @@

Vt#vtree{root=Root}

end;

insert(Vt, Nodes) ->

- T1 = now(),

+ T1 = erlang:monotonic_time(seconds),

Root = Vt#vtree.root,

PartitionedNodes = [Nodes],

KpNodes = insert_multiple(Vt, PartitionedNodes, [Root]),

NewRoot = vtree_modify:write_new_root(Vt, KpNodes),

?LOG_DEBUG("Insertion into existing tree took: ~ps~n",

- [timer:now_diff(now(), T1)/1000000]),

+ [erlang:monotonic_time(seconds) - T1]),

Vt#vtree{root=NewRoot}.

diff -u ns_server/deps/ale/src/ale.erl.orig ns_server/deps/ale/src/ale.erl

--- ns_server/deps/ale/src/ale.erl.orig 2015-10-07 22:19:28.730212000 +0200

+++ ns_server/deps/ale/src/ale.erl 2015-10-07 22:20:09.788761000 +0200

@@ -45,12 +45,12 @@

-include("ale.hrl").

--record(state, {sinks :: dict(),

- loggers :: dict()}).

+-record(state, {sinks :: dict:dict(),

+ loggers :: dict:dict()}).

-record(logger, {name :: atom(),

loglevel :: loglevel(),

- sinks :: dict(),

+ sinks :: dict:dict(),

formatter :: module()}).

-record(sink, {name :: atom(),

==> ns_babysitter (compile)

src/ns_crash_log.erl:18: type queue() undefined

../ns_server/build/deps/ns_babysitter/CMakeFiles/ns_babysitter.dir/build.make:49: recipe for target '../ns_server/build/deps/ns_babysitter/CMakeFiles/ns_babysitter' failed

gmake[4]: *** [../ns_server/build/deps/ns_babysitter/CMakeFiles/ns_babysitter] Error 1

CMakeFiles/Makefile2:7484: recipe for target '../ns_server/build/deps/ns_babysitter/CMakeFiles/ns_babysitter.dir/all' failed

gmake[3]: *** [../ns_server/build/deps/ns_babysitter/CMakeFiles/ns_babysitter.dir/all] Error 2

diff -r -u ns_server.orig/deps/ale/src/ale.erl ns_server/deps/ale/src/ale.erl

--- ns_server.orig/deps/ale/src/ale.erl 2015-10-08 11:31:20.520281000 +0200

+++ ns_server/deps/ale/src/ale.erl 2015-10-07 22:20:09.788761000 +0200

@@ -45,12 +45,12 @@

-include("ale.hrl").

--record(state, {sinks :: dict(),

- loggers :: dict()}).

+-record(state, {sinks :: dict:dict(),

+ loggers :: dict:dict()}).

-record(logger, {name :: atom(),

loglevel :: loglevel(),

- sinks :: dict(),

+ sinks :: dict:dict(),

formatter :: module()}).

-record(sink, {name :: atom(),

diff -r -u ns_server.orig/deps/ns_babysitter/src/ns_crash_log.erl ns_server/deps/ns_babysitter/src/ns_crash_log.erl

--- ns_server.orig/deps/ns_babysitter/src/ns_crash_log.erl 2015-10-08 11:31:20.540433000 +0200

+++ ns_server/deps/ns_babysitter/src/ns_crash_log.erl 2015-10-07 22:21:45.292975000 +0200

@@ -13,9 +13,9 @@

-define(MAX_CRASHES_LEN, 100).

-record(state, {file_path :: file:filename(),

- crashes :: queue(),

+ crashes :: queue:queue(),

crashes_len :: non_neg_integer(),

- crashes_saved :: queue(),

+ crashes_saved :: queue:queue(),

consumer_from = undefined :: undefined | {pid(), reference()},

consumer_mref = undefined :: undefined | reference()

}).

diff -r -u ns_server.orig/include/remote_clusters_info.hrl ns_server/include/remote_clusters_info.hrl

--- ns_server.orig/include/remote_clusters_info.hrl 2015-10-08 11:31:20.544760000 +0200

+++ ns_server/include/remote_clusters_info.hrl 2015-10-07 22:22:48.541494000 +0200

@@ -20,6 +20,6 @@

cluster_cert :: binary() | undefined,

server_list_nodes :: [#remote_node{}],

bucket_caps :: [binary()],

- raw_vbucket_map :: dict(),

- capi_vbucket_map :: dict(),

+ raw_vbucket_map :: dict:dict(),

+ capi_vbucket_map :: dict:dict(),

cluster_version :: {integer(), integer()}}).

diff -r -u ns_server.orig/src/auto_failover.erl ns_server/src/auto_failover.erl

--- ns_server.orig/src/auto_failover.erl 2015-10-08 11:31:21.396519000 +0200

+++ ns_server/src/auto_failover.erl 2015-10-08 11:19:43.710301000 +0200

@@ -336,7 +336,7 @@

%%

%% @doc Returns a list of nodes that should be active, but are not running.

--spec actual_down_nodes(dict(), [atom()], [{atom(), term()}]) -> [atom()].

+-spec actual_down_nodes(dict:dict(), [atom()], [{atom(), term()}]) -> [atom()].

actual_down_nodes(NodesDict, NonPendingNodes, Config) ->

% Get all buckets

BucketConfigs = ns_bucket:get_buckets(Config),

diff -r -u ns_server.orig/src/dcp_upgrade.erl ns_server/src/dcp_upgrade.erl

--- ns_server.orig/src/dcp_upgrade.erl 2015-10-08 11:31:21.400562000 +0200

+++ ns_server/src/dcp_upgrade.erl 2015-10-08 11:19:47.370353000 +0200

@@ -37,7 +37,7 @@

num_buckets :: non_neg_integer(),

bucket :: bucket_name(),

bucket_config :: term(),

- progress :: dict(),

+ progress :: dict:dict(),

workers :: [pid()]}).

start_link(Buckets) ->

diff -r -u ns_server.orig/src/janitor_agent.erl ns_server/src/janitor_agent.erl

--- ns_server.orig/src/janitor_agent.erl 2015-10-08 11:31:21.401859000 +0200

+++ ns_server/src/janitor_agent.erl 2015-10-08 11:18:09.979728000 +0200

@@ -43,7 +43,7 @@

rebalance_status = finished :: in_process | finished,

replicators_primed :: boolean(),

- apply_vbucket_states_queue :: queue(),

+ apply_vbucket_states_queue :: queue:queue(),

apply_vbucket_states_worker :: undefined | pid(),

rebalance_subprocesses_registry :: pid()}).

diff -r -u ns_server.orig/src/menelaus_web_alerts_srv.erl ns_server/src/menelaus_web_alerts_srv.erl

--- ns_server.orig/src/menelaus_web_alerts_srv.erl 2015-10-08 11:31:21.405690000 +0200

+++ ns_server/src/menelaus_web_alerts_srv.erl 2015-10-08 10:58:15.641331000 +0200

@@ -219,7 +219,7 @@

%% @doc if listening on a non localhost ip, detect differences between

%% external listening host and current node host

--spec check(atom(), dict(), list(), [{atom(),number()}]) -> dict().

+-spec check(atom(), dict:dict(), list(), [{atom(),number()}]) -> dict:dict().

check(ip, Opaque, _History, _Stats) ->

{_Name, Host} = misc:node_name_host(node()),

case can_listen(Host) of

@@ -290,7 +290,7 @@

%% @doc only check for disk usage if there has been no previous

%% errors or last error was over the timeout ago

--spec hit_rate_limit(atom(), dict()) -> true | false.

+-spec hit_rate_limit(atom(), dict:dict()) -> true | false.

hit_rate_limit(Key, Dict) ->

case dict:find(Key, Dict) of

error ->

@@ -355,7 +355,7 @@

%% @doc list of buckets thats measured stats have increased

--spec stat_increased(dict(), dict()) -> list().

+-spec stat_increased(dict:dict(), dict:dict()) -> list().

stat_increased(New, Old) ->

[Bucket || {Bucket, Val} <- dict:to_list(New), increased(Bucket, Val, Old)].

@@ -392,7 +392,7 @@

%% @doc Lookup old value and test for increase

--spec increased(string(), integer(), dict()) -> true | false.

+-spec increased(string(), integer(), dict:dict()) -> true | false.

increased(Key, Val, Dict) ->

case dict:find(Key, Dict) of

error ->

diff -r -u ns_server.orig/src/misc.erl ns_server/src/misc.erl

--- ns_server.orig/src/misc.erl 2015-10-08 11:31:21.407175000 +0200

+++ ns_server/src/misc.erl 2015-10-08 10:55:15.167246000 +0200

@@ -54,7 +54,7 @@

randomize() ->

case get(random_seed) of

undefined ->

- random:seed(erlang:now());

+ random:seed(erlang:timestamp());

_ ->

ok

end.

@@ -303,8 +303,8 @@

position(E, [_|List], N) -> position(E, List, N+1).

-now_int() -> time_to_epoch_int(now()).

-now_float() -> time_to_epoch_float(now()).

+now_int() -> time_to_epoch_int(erlang:timestamp()).

+now_float() -> time_to_epoch_float(erlang:timestamp()).

time_to_epoch_int(Time) when is_integer(Time) or is_float(Time) ->

Time;

@@ -1239,7 +1239,7 @@

%% Get an item from from a dict, if it doesnt exist return default

--spec dict_get(term(), dict(), term()) -> term().

+-spec dict_get(term(), dict:dict(), term()) -> term().

dict_get(Key, Dict, Default) ->

case dict:is_key(Key, Dict) of

true -> dict:fetch(Key, Dict);

diff -r -u ns_server.orig/src/ns_doctor.erl ns_server/src/ns_doctor.erl

--- ns_server.orig/src/ns_doctor.erl 2015-10-08 11:31:21.410269000 +0200

+++ ns_server/src/ns_doctor.erl 2015-10-08 10:53:49.208657000 +0200

@@ -30,8 +30,8 @@

get_tasks_version/0, build_tasks_list/2]).

-record(state, {

- nodes :: dict(),

- tasks_hash_nodes :: undefined | dict(),

+ nodes :: dict:dict(),

+ tasks_hash_nodes :: undefined | dict:dict(),

tasks_hash :: undefined | integer(),

tasks_version :: undefined | string()

}).

@@ -112,14 +112,14 @@

RV = case dict:find(Node, Nodes) of

{ok, Status} ->

LiveNodes = [node() | nodes()],

- annotate_status(Node, Status, now(), LiveNodes);

+ annotate_status(Node, Status, erlang:timestamp(), LiveNodes);

_ ->

[]

end,

{reply, RV, State};

handle_call(get_nodes, _From, #state{nodes=Nodes} = State) ->

- Now = erlang:now(),

+ Now = erlang:timestamp(),

LiveNodes = [node()|nodes()],

Nodes1 = dict:map(

fun (Node, Status) ->

@@ -210,7 +210,7 @@

orelse OldReadyBuckets =/= NewReadyBuckets.

update_status(Name, Status0, Dict) ->

- Status = [{last_heard, erlang:now()} | Status0],

+ Status = [{last_heard, erlang:timestamp()} | Status0],

PrevStatus = case dict:find(Name, Dict) of

{ok, V} -> V;

error -> []

diff -r -u ns_server.orig/src/ns_janitor_map_recoverer.erl ns_server/src/ns_janitor_map_recoverer.erl

--- ns_server.orig/src/ns_janitor_map_recoverer.erl 2015-10-08 11:31:21.410945000 +0200

+++ ns_server/src/ns_janitor_map_recoverer.erl 2015-10-08 10:52:23.927033000 +0200

@@ -79,7 +79,7 @@

end.

-spec recover_map([{non_neg_integer(), node()}],

- dict(),

+ dict:dict(),

boolean(),

non_neg_integer(),

pos_integer(),

diff -r -u ns_server.orig/src/ns_memcached.erl ns_server/src/ns_memcached.erl

--- ns_server.orig/src/ns_memcached.erl 2015-10-08 11:31:21.411920000 +0200

+++ ns_server/src/ns_memcached.erl 2015-10-08 10:51:08.281320000 +0200

@@ -65,9 +65,9 @@

running_very_heavy = 0,

%% NOTE: otherwise dialyzer seemingly thinks it's possible

%% for queue fields to be undefined

- fast_calls_queue = impossible :: queue(),

- heavy_calls_queue = impossible :: queue(),

- very_heavy_calls_queue = impossible :: queue(),

+ fast_calls_queue = impossible :: queue:queue(),

+ heavy_calls_queue = impossible :: queue:queue(),

+ very_heavy_calls_queue = impossible :: queue:queue(),

status :: connecting | init | connected | warmed,

start_time::tuple(),

bucket::nonempty_string(),

diff -r -u ns_server.orig/src/ns_orchestrator.erl ns_server/src/ns_orchestrator.erl

--- ns_server.orig/src/ns_orchestrator.erl 2015-10-08 11:31:21.412957000 +0200

+++ ns_server/src/ns_orchestrator.erl 2015-10-08 10:45:51.967739000 +0200

@@ -251,7 +251,7 @@

not_needed |

{error, {failed_nodes, [node()]}}

when UUID :: binary(),

- RecoveryMap :: dict().

+ RecoveryMap :: dict:dict().

start_recovery(Bucket) ->

wait_for_orchestrator(),

gen_fsm:sync_send_event(?SERVER, {start_recovery, Bucket}).

@@ -260,7 +260,7 @@

when Status :: [{bucket, bucket_name()} |

{uuid, binary()} |

{recovery_map, RecoveryMap}],

- RecoveryMap :: dict().

+ RecoveryMap :: dict:dict().

recovery_status() ->

case is_recovery_running() of

false ->

@@ -271,7 +271,7 @@

end.

-spec recovery_map(bucket_name(), UUID) -> bad_recovery | {ok, RecoveryMap}

- when RecoveryMap :: dict(),

+ when RecoveryMap :: dict:dict(),

UUID :: binary().

recovery_map(Bucket, UUID) ->

wait_for_orchestrator(),

@@ -1062,7 +1062,7 @@

{next_state, FsmState, State#janitor_state{remaining_buckets = NewBucketRequests}}

end.

--spec update_progress(dict()) -> ok.

+-spec update_progress(dict:dict()) -> ok.

update_progress(Progress) ->

gen_fsm:send_event(?SERVER, {update_progress, Progress}).

diff -r -u ns_server.orig/src/ns_replicas_builder.erl ns_server/src/ns_replicas_builder.erl

--- ns_server.orig/src/ns_replicas_builder.erl 2015-10-08 11:31:21.413763000 +0200

+++ ns_server/src/ns_replicas_builder.erl 2015-10-08 10:43:03.655761000 +0200

@@ -153,7 +153,7 @@

observe_wait_all_done_old_style_loop(Bucket, SrcNode, Sleeper, NewTapNames, SleepsSoFar+1)

end.

--spec filter_true_producers(list(), set(), binary()) -> [binary()].

+-spec filter_true_producers(list(), sets:set(), binary()) -> [binary()].

filter_true_producers(PList, TapNamesSet, StatName) ->

[TapName

|| {<<"eq_tapq:replication_", Key/binary>>, <<"true">>} <- PList,

diff -r -u ns_server.orig/src/ns_vbucket_mover.erl ns_server/src/ns_vbucket_mover.erl

--- ns_server.orig/src/ns_vbucket_mover.erl 2015-10-08 11:31:21.415305000 +0200

+++ ns_server/src/ns_vbucket_mover.erl 2015-10-08 10:42:02.815008000 +0200

@@ -36,14 +36,14 @@

-export([inhibit_view_compaction/3]).

--type progress_callback() :: fun((dict()) -> any()).

+-type progress_callback() :: fun((dict:dict()) -> any()).

-record(state, {bucket::nonempty_string(),

disco_events_subscription::pid(),

- map::array(),

+ map::array:array(),

moves_scheduler_state,

progress_callback::progress_callback(),

- all_nodes_set::set(),

+ all_nodes_set::sets:set(),

replication_type::bucket_replication_type()}).

%%

@@ -218,14 +218,14 @@

%% @private

%% @doc Convert a map array back to a map list.

--spec array_to_map(array()) -> vbucket_map().

+-spec array_to_map(array:array()) -> vbucket_map().

array_to_map(Array) ->

array:to_list(Array).

%% @private

%% @doc Convert a map, which is normally a list, into an array so that

%% we can randomly access the replication chains.

--spec map_to_array(vbucket_map()) -> array().

+-spec map_to_array(vbucket_map()) -> array:array().

map_to_array(Map) ->

array:fix(array:from_list(Map)).

diff -r -u ns_server.orig/src/path_config.erl ns_server/src/path_config.erl

--- ns_server.orig/src/path_config.erl 2015-10-08 11:31:21.415376000 +0200

+++ ns_server/src/path_config.erl 2015-10-08 10:38:48.687500000 +0200

@@ -53,7 +53,7 @@

filename:join(component_path(NameAtom), SubPath).

tempfile(Dir, Prefix, Suffix) ->

- {_, _, MicroSecs} = erlang:now(),

+ {_, _, MicroSecs} = erlang:timestamp(),

Pid = os:getpid(),

Filename = Prefix ++ integer_to_list(MicroSecs) ++ "_" ++

Pid ++ Suffix,

diff -r -u ns_server.orig/src/recoverer.erl ns_server/src/recoverer.erl

--- ns_server.orig/src/recoverer.erl 2015-10-08 11:31:21.415655000 +0200

+++ ns_server/src/recoverer.erl 2015-10-08 10:36:46.182185000 +0200

@@ -23,16 +23,16 @@

is_recovery_complete/1]).

-record(state, {bucket_config :: list(),

- recovery_map :: dict(),

- post_recovery_chains :: dict(),

- apply_map :: array(),

- effective_map :: array()}).

+ recovery_map :: dict:dict(),

+ post_recovery_chains :: dict:dict(),

+ apply_map :: array:array(),

+ effective_map :: array:array()}).

-spec start_recovery(BucketConfig) ->

{ok, RecoveryMap, {Servers, BucketConfig}, #state{}}

| not_needed

when BucketConfig :: list(),

- RecoveryMap :: dict(),

+ RecoveryMap :: dict:dict(),

Servers :: [node()].

start_recovery(BucketConfig) ->

NumVBuckets = proplists:get_value(num_vbuckets, BucketConfig),

@@ -92,7 +92,7 @@

effective_map=array:from_list(OldMap)}}

end.

--spec get_recovery_map(#state{}) -> dict().

+-spec get_recovery_map(#state{}) -> dict:dict().

get_recovery_map(#state{recovery_map=RecoveryMap}) ->

RecoveryMap.

@@ -205,7 +205,7 @@

-define(MAX_NUM_SERVERS, 50).

compute_recovery_map_test_() ->

- random:seed(now()),

+ random:seed(erlang:timestamp()),

{timeout, 100,

{inparallel,

diff -r -u ns_server.orig/src/remote_clusters_info.erl ns_server/src/remote_clusters_info.erl

--- ns_server.orig/src/remote_clusters_info.erl 2015-10-08 11:31:21.416143000 +0200

+++ ns_server/src/remote_clusters_info.erl 2015-10-08 10:37:57.095653000 +0200

@@ -121,10 +121,10 @@

{node, node(), remote_clusters_info_config_update_interval}, 10000)).

-record(state, {cache_path :: string(),

- scheduled_config_updates :: set(),

- remote_bucket_requests :: dict(),

- remote_bucket_waiters :: dict(),

- remote_bucket_waiters_trefs :: dict()}).

+ scheduled_config_updates :: sets:set(),

+ remote_bucket_requests :: dict:dict(),

+ remote_bucket_waiters :: dict:dict(),

+ remote_bucket_waiters_trefs :: dict:dict()}).

start_link() ->

gen_server:start_link({local, ?MODULE}, ?MODULE, [], []).

diff -r -u ns_server.orig/src/ringbuffer.erl ns_server/src/ringbuffer.erl

--- ns_server.orig/src/ringbuffer.erl 2015-10-08 11:31:21.416440000 +0200

+++ ns_server/src/ringbuffer.erl 2015-10-08 10:33:36.063532000 +0200

@@ -18,7 +18,7 @@

-export([new/1, to_list/1, to_list/2, to_list/3, add/2]).

% Create a ringbuffer that can hold at most Size items.

--spec new(integer()) -> queue().

+-spec new(integer()) -> queue:queue().

new(Size) ->

queue:from_list([empty || _ <- lists:seq(1, Size)]).

@@ -26,15 +26,15 @@

% Convert the ringbuffer to a list (oldest items first).

-spec to_list(integer()) -> list().

to_list(R) -> to_list(R, false).

--spec to_list(queue(), W) -> list() when is_subtype(W, boolean());

- (integer(), queue()) -> list().

+-spec to_list(queue:queue(), W) -> list() when is_subtype(W, boolean());

+ (integer(), queue:queue()) -> list().

to_list(R, WithEmpties) when is_boolean(WithEmpties) ->

queue:to_list(to_queue(R));

% Get at most the N newest items from the given ringbuffer (oldest first).

to_list(N, R) -> to_list(N, R, false).

--spec to_list(integer(), queue(), boolean()) -> list().

+-spec to_list(integer(), queue:queue(), boolean()) -> list().

to_list(N, R, WithEmpties) ->

L = lists:reverse(queue:to_list(to_queue(R, WithEmpties))),

lists:reverse(case (catch lists:split(N, L)) of

@@ -43,14 +43,14 @@

end).

% Add an element to a ring buffer.

--spec add(term(), queue()) -> queue().

+-spec add(term(), queue:queue()) -> queue:queue().

add(E, R) ->

queue:in(E, queue:drop(R)).

% private

--spec to_queue(queue()) -> queue().

+-spec to_queue(queue:queue()) -> queue:queue().

to_queue(R) -> to_queue(R, false).

--spec to_queue(queue(), boolean()) -> queue().

+-spec to_queue(queue:queue(), boolean()) -> queue:queue().

to_queue(R, false) -> queue:filter(fun(X) -> X =/= empty end, R);

to_queue(R, true) -> R.

diff -r -u ns_server.orig/src/vbucket_map_mirror.erl ns_server/src/vbucket_map_mirror.erl

--- ns_server.orig/src/vbucket_map_mirror.erl 2015-10-08 11:31:21.417885000 +0200

+++ ns_server/src/vbucket_map_mirror.erl 2015-10-07 22:27:21.036638000 +0200

@@ -119,7 +119,7 @@

end).

-spec node_vbuckets_dict_or_not_present(bucket_name()) ->

- dict() | no_map | not_present.

+ dict:dict() | no_map | not_present.

node_vbuckets_dict_or_not_present(BucketName) ->

case ets:lookup(vbucket_map_mirror, BucketName) of

[] ->

diff -r -u ns_server.orig/src/vbucket_move_scheduler.erl ns_server/src/vbucket_move_scheduler.erl

--- ns_server.orig/src/vbucket_move_scheduler.erl 2015-10-08 11:31:21.418054000 +0200

+++ ns_server/src/vbucket_move_scheduler.erl 2015-10-07 22:26:10.523913000 +0200

@@ -128,7 +128,7 @@

backfills_limit :: non_neg_integer(),

moves_before_compaction :: non_neg_integer(),

total_in_flight = 0 :: non_neg_integer(),

- moves_left_count_per_node :: dict(), % node() -> non_neg_integer()

+ moves_left_count_per_node :: dict:dict(), % node() -> non_neg_integer()

moves_left :: [move()],

%% pending moves when current master is undefined For them

@@ -136,13 +136,13 @@

%% And that's first moves that we ever consider doing

moves_from_undefineds :: [move()],

- compaction_countdown_per_node :: dict(), % node() -> non_neg_integer()

- in_flight_backfills_per_node :: dict(), % node() -> non_neg_integer() (I.e. counts current moves)

- in_flight_per_node :: dict(), % node() -> non_neg_integer() (I.e. counts current moves)

- in_flight_compactions :: set(), % set of nodes

+ compaction_countdown_per_node :: dict:dict(), % node() -> non_neg_integer()

+ in_flight_backfills_per_node :: dict:dict(), % node() -> non_neg_integer() (I.e. counts current moves)

+ in_flight_per_node :: dict:dict(), % node() -> non_neg_integer() (I.e. counts current moves)

+ in_flight_compactions :: sets:set(), % set of nodes

- initial_move_counts :: dict(),

- left_move_counts :: dict(),

+ initial_move_counts :: dict:dict(),

+ left_move_counts :: dict:dict(),

inflight_moves_limit :: non_neg_integer()

}).

diff -r -u ns_server.orig/src/xdc_vbucket_rep_xmem.erl ns_server/src/xdc_vbucket_rep_xmem.erl

--- ns_server.orig/src/xdc_vbucket_rep_xmem.erl 2015-10-08 11:31:21.419959000 +0200

+++ ns_server/src/xdc_vbucket_rep_xmem.erl 2015-10-07 22:24:35.829228000 +0200

@@ -134,7 +134,7 @@

end.

%% internal

--spec categorise_statuses_to_dict(list(), list()) -> {dict(), dict()}.

+-spec categorise_statuses_to_dict(list(), list()) -> {dict:dict(), dict:dict()}.

categorise_statuses_to_dict(Statuses, MutationsList) ->

{ErrorDict, ErrorKeys, _}

= lists:foldl(fun(Status, {DictAcc, ErrorKeyAcc, CountAcc}) ->

@@ -164,7 +164,7 @@

lists:reverse(Statuses)),

{ErrorDict, ErrorKeys}.

--spec lookup_error_dict(term(), dict()) -> integer().

+-spec lookup_error_dict(term(), dict:dict()) -> integer().

lookup_error_dict(Key, ErrorDict)->

case dict:find(Key, ErrorDict) of

error ->

@@ -173,7 +173,7 @@

V

end.

--spec convert_error_dict_to_string(dict()) -> list().

+-spec convert_error_dict_to_string(dict:dict()) -> list().

convert_error_dict_to_string(ErrorKeyDict) ->

StrHead = case dict:size(ErrorKeyDict) > 0 of

true ->
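
Most of the ns_server changes above are the same mechanical rewrite. OTP 17 deprecated the built-in dict(), queue(), array() and set() types and OTP 18 removed them, so each one has to be qualified with its module (note that the set type lives in the sets module). Here is a minimal sketch of how that rewrite could be scripted with sed. The function name qualify_otp_types is made up for this example, this is not how the diff above was produced, and the result should still be reviewed by hand.

```shell
# Hypothetical helper: reads Erlang source on stdin and writes it to stdout
# with the old built-in container types qualified by their module.
# A type is only rewritten when it is not already qualified, i.e. when it is
# preceded by "::", by the start of the line, or by a character that is
# neither ":" nor part of an identifier.
qualify_otp_types() {
    sed -E \
        -e 's/(^|::|[^:[:alnum:]_])(dict|queue|array)\(\)/\1\2:\2()/g' \
        -e 's/(^|::|[^:[:alnum:]_])set\(\)/\1sets:set()/g'
}

# Possible usage on one file (keeps a copy of the original):
#   cp src/misc.erl src/misc.erl.orig
#   qualify_otp_types < src/misc.erl.orig > src/misc.erl
```

The erlang:now() replacements are not covered by this sketch, because the right substitute depends on what the timestamp is used for.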

Next!

# gmake

[no errors]

There is no next error. Let's look at the destination folder:

# ll install/

total 32

drwxr-xr-x 3 root wheel 1536 Oct 8 11:21 bin/

drwxr-xr-x 2 root wheel 512 Oct 8 11:21 doc/

drwxr-xr-x 5 root wheel 512 Oct 8 11:21 etc/

drwxr-xr-x 6 root wheel 1024 Oct 8 11:21 lib/

drwxr-xr-x 3 root wheel 512 Oct 8 11:21 man/

drwxr-xr-x 2 root wheel 512 Oct 8 11:21 samples/

drwxr-xr-x 3 root wheel 512 Oct 8 11:21 share/

drwxr-xr-x 3 root wheel 512 Oct 8 11:21 var/

Success. Couchbase is built.

Running the server

# bin/couchbase-server

bin/couchbase-server: Command not found.

What the hell is that file?

root@couchbasebsd:~/couchbase # head bin/couchbase-server

#! /bin/bash

#

# Copyright (c) 2010-2011, Couchbase, Inc.

# All rights reserved

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

Yum. Sweet bash. Let's dirty our system a bit:

# ln -s /usr/local/bin/bash /bin/bash
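
The "Command not found" message is about the interpreter named on the script's first line, not about the script itself: bash installed from ports lives in /usr/local/bin, so /bin/bash does not exist on a stock FreeBSD system. As a rough sketch, the check below reports scripts whose interpreter is missing. The function name missing_interpreter is hypothetical, not part of Couchbase or FreeBSD.

```shell
# Hypothetical helper: print the interpreter named on a script's "#!" line
# when that interpreter does not exist or is not executable.
# Prints nothing when the file has no "#!" line or its interpreter is usable.
missing_interpreter() {
    first_line=$(head -n 1 "$1")
    case $first_line in
        '#!'*) ;;
        *) return 0 ;;              # no shebang line, nothing to check
    esac
    # Drop the "#!" prefix, then split on whitespace: the first word is the
    # interpreter path (this also handles "#! /bin/bash" with a space).
    set -- ${first_line#'#!'}
    [ -n "${1:-}" ] || return 0     # bare "#!" with no interpreter
    [ -x "$1" ] || printf '%s\n' "$1"
}

# Possible usage:
#   for f in bin/*; do missing_interpreter "$f"; done | sort -u
```

An alternative to the symlink would be to change the first line of the affected scripts to "#!/usr/bin/env bash", at the cost of patching each file.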

Let's try running the server again:

# bin/couchbase-server

Erlang/OTP 18 [erts-7.0.1] [source] [64-bit] [smp:2:2] [async-threads:16] [hipe] [kernel-poll:false]

Eshell V7.0.1 (abort with ^G)

Nothing complained. Let's see if the web UI is present.

# sockstat -4l | grep 8091

#

It's not.

What's in the log?

[user:critical,2015-10-08T11:42:24.599,ns_1@127.0.0.1:ns_server_sup<0.271.0>:menelaus_sup:start_link:51]Couchbase Server has failed to start on web port 8091 on node 'ns_1@127.0.0.1'. Perhaps another process has taken port 8091 already? If so, please stop that process first before trying again.

[ns_server:info,2015-10-08T11:42:24.600,ns_1@127.0.0.1:mb_master<0.319.0>:mb_master:terminate:299]Synchronously shutting down child mb_master_sup

The server could not start. Let's look at more logs:

# /root/couchbase/install/bin/cbbrowse_logs

[...]

[error_logger:error,2015-10-08T11:57:36.860,ns_1@127.0.0.1:error_logger<0.6.0>:ale_error_logger_handler:do_log:203]

=========================SUPERVISOR REPORT=========================

Supervisor: {local,ns_ssl_services_sup}

Context: start_error

Reason: {bad_generate_cert_exit,1,<<>>}

Offender: [{pid,undefined},

{id,ns_ssl_services_setup},

{mfargs,{ns_ssl_services_setup,start_link,[]}},

{restart_type,permanent},

{shutdown,1000},

{child_type,worker}]

bad_generate_cert_exit? Let's execute that program ourselves:

# bin/generate_cert

ELF binary type "0" not known.

bin/generate_cert: Exec format error. Binary file not executable.

# file bin/generate_cert

bin/generate_cert: ELF 32-bit LSB executable, Intel 80386, version 1 (SYSV), statically linked, BuildID[md5/uuid]=48f74c5e6c624dfe8ecba6d8687f151b, not stripped

Nothing beats building some software on FreeBSD and ending up with Linux binaries.
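
The file output is enough to tell the two kinds of binaries apart: a natively built ELF file is branded "(FreeBSD)", while this one says "(SYSV)". The sketch below uses that to list anything in install/bin that is ELF but not branded for FreeBSD. It assumes file prints descriptions in the format shown in this article, and the function names are made up for the example.

```shell
# Hypothetical helper: succeed (exit 0) when a `file` description belongs to
# an ELF binary that is not branded for FreeBSD.
is_foreign_elf() {
    case $1 in
        *ELF*'(FreeBSD)'*) return 1 ;;  # native binary
        *ELF*)             return 0 ;;  # ELF, but built for another OS
        *)                 return 1 ;;  # script, text file, ...
    esac
}

# List regular files in a directory (default: install/bin) that look foreign.
list_foreign_elf() {
    for f in "${1:-install/bin}"/*; do
        [ -f "$f" ] || continue
        # -b prints the description without the file name in front.
        if is_foreign_elf "$(file -b "$f")"; then
            printf '%s\n' "$f"
        fi
    done
}
```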

Where is the source of that program?

# find . -name '*generate_cert*'

./ns_server/deps/generate_cert

./ns_server/deps/generate_cert/generate_cert.go

./ns_server/priv/i386-darwin-generate_cert

./ns_server/priv/i386-linux-generate_cert

./ns_server/priv/i386-win32-generate_cert.exe

./install/bin/generate_cert

Let's build it and replace the Linux one.

# cd ns_server/deps/generate_cert/

# go build

# file generate_cert

generate_cert: ELF 64-bit LSB executable, x86-64, version 1 (FreeBSD), dynamically linked, interpreter /libexec/ld-elf.so.1, not stripped

# cp generate_cert ../../../install/bin/

# cd ../gozip/

# go build

# cp gozip ../../../install/bin
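
Both Go helpers are rebuilt the same way, so the steps above can be wrapped in a small loop. This is only a sketch: rebuild_go_helpers is a made-up name, and it assumes it is run from the top of the source tree with go in the PATH.

```shell
# Hypothetical helper: build each named Go tool under ns_server/deps natively
# and copy the result over the prebuilt binary in install/bin.
# Stops at the first tool that fails to build or copy.
rebuild_go_helpers() {
    for tool in "$@"; do
        ( cd "ns_server/deps/$tool" && go build ) || return 1
        cp "ns_server/deps/$tool/$tool" install/bin/ || return 1
    done
}

# Possible usage:
#   rebuild_go_helpers generate_cert gozip
```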

Let's run the server again.

# bin/couchbase-server

Erlang/OTP 18 [erts-7.0.1] [source] [64-bit] [smp:2:2] [async-threads:16] [hipe] [kernel-poll:false]

Eshell V7.0.1 (abort with ^G)

# sockstat -4l | grep 8091

root beam.smp 93667 39 tcp4 *:8091 *:*

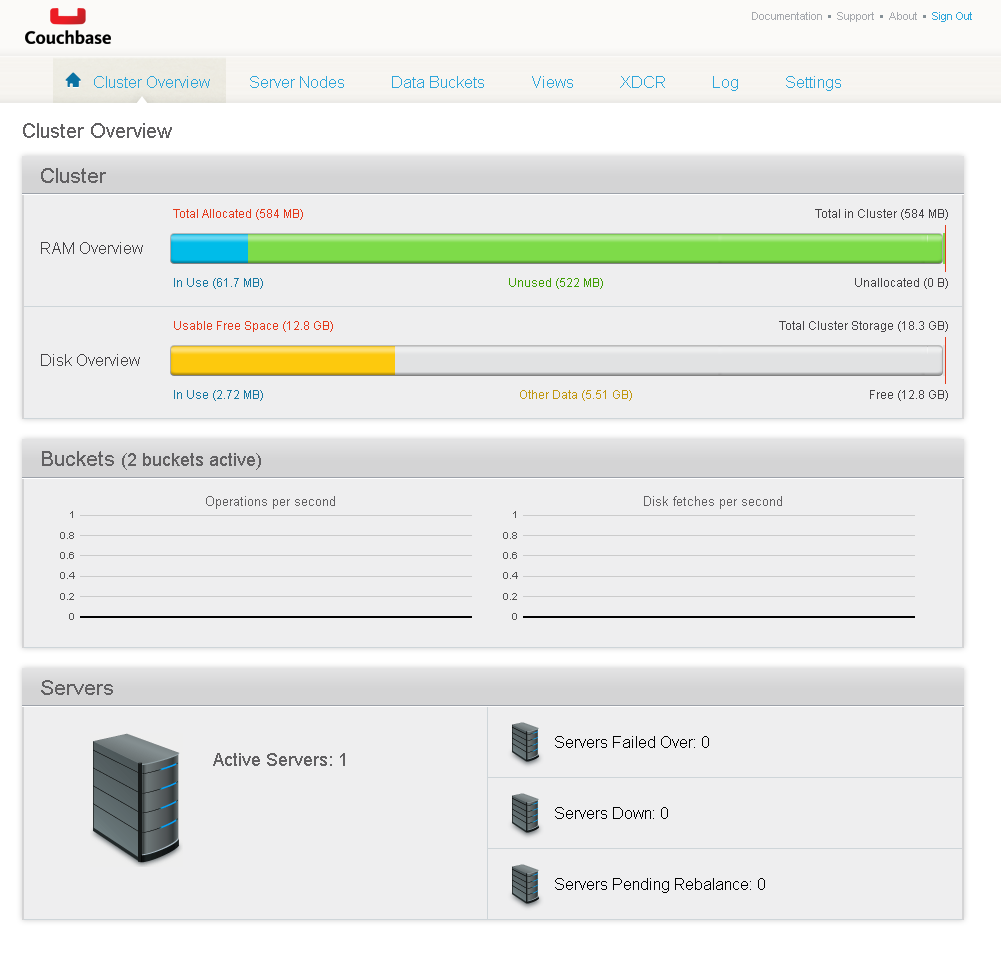

This time the web UI is present.
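
When scripting this check, it may be worth polling instead of looking once, on the assumption that the listener can take a few seconds to appear after the Erlang shell comes up. A minimal sketch, with hypothetical function names, that retries the same sockstat test:

```shell
# Hypothetical helper: run a check command once per second until it succeeds
# or the given number of attempts is used up.
wait_for() {
    attempts=$1
    shift
    while [ "$attempts" -gt 0 ]; do
        "$@" && return 0
        attempts=$((attempts - 1))
        sleep 1
    done
    return 1
}

# The check used in this article, wrapped so wait_for can call it.
web_ui_listening() {
    sockstat -4l | grep -q 8091
}

# Possible usage:
#   wait_for 30 web_ui_listening && echo "web UI is up"
```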

Let's follow the setup and create a bucket.

So far, everything seems to be working.

Interacting with the server via the CLI also works.

# bin/couchbase-cli bucket-list -u Administrator -p abcd1234 -c 127.0.0.1

default

bucketType: membase

authType: sasl

saslPassword:

numReplicas: 1

ramQuota: 507510784

ramUsed: 31991104

test_bucket

bucketType: membase

authType: sasl

saslPassword:

numReplicas: 1

ramQuota: 104857600

ramUsed: 31991008

Conclusion

I obviously haven't tested every feature of the server, but as far as I've demonstrated, it's perfectly capable of running on FreeBSD.